November 20, 2018

Technology sneak peek: advances in real-time ray tracing

“We broke a lot of new ground on this project: a fully ray-traced dynamic scene with multiple shadowed and textured area lights, true area light sampling, ray-traced translucent and clear coat shading models, reflection shadow denoising, and screen space real-time ray-traced global illumination,” says François Antoine, Director of Embedded Systems at Epic Games, who acted as VFX supervisor on the “Speed of Light” project.

“The project consists of two components: the cinematic ‘attract mode’ and the interactive lighting studio, both of which use the exact same vehicle asset and rendering feature set. Actually, the cinematic is filmed in real time within the interactive lighting studio itself, using Unreal Engine’s Sequencer.”

The demo builds on the advances showcased at GDC 2018 with “Reflections,” a short cinematic featuring three highly reflective, ray-traced characters from the Star Wars series, rendered in real time with Unreal Engine. “Speed of Light” highlights how far the performance of ray tracing in Unreal Engine has come since then.

In addition to using a more comprehensive RTX feature set than “Reflections” did, the demo ran on just two NVIDIA Quadro RTX cards, compared with the four Volta GPUs required for “Reflections.” This alone is a testament to the pace at which NVIDIA’s hardware is advancing real-time ray tracing as a feature for future versions of Unreal Engine.

Imagining the demo

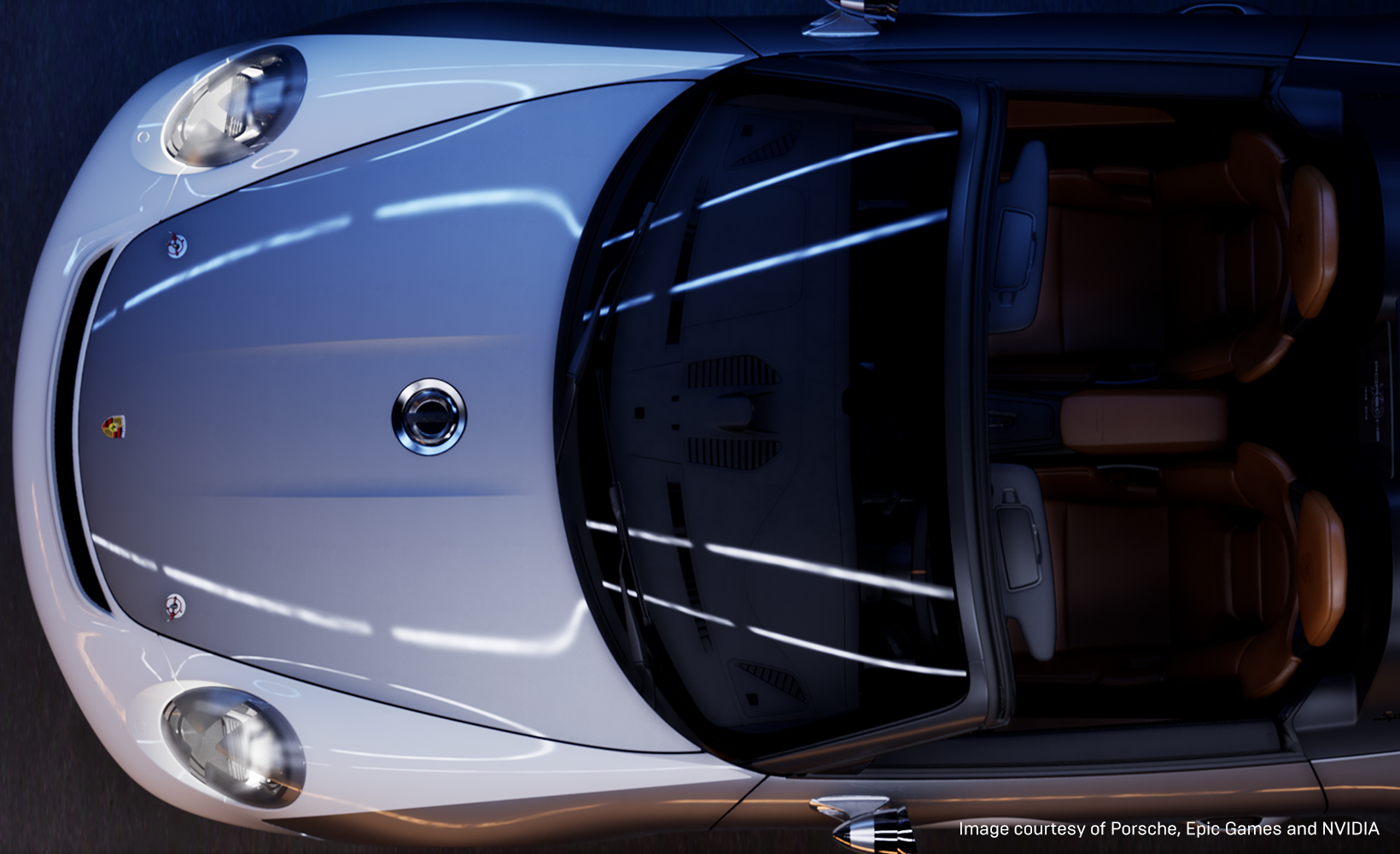

The idea for the demo came about when Antoine was kicking around ideas with CTO Kim Libreri on how Unreal Engine could make the most of the features offered by the NVIDIA Quadro RTX card. “When we first got wind of Turing’s ray-tracing feature set, we immediately thought of an automotive-focused project,” says Antoine. “Cars are all about smooth, curvy reflections, what we call ‘liquid lines’ in industry speak.”

“We got to talking about a way to visually manifest the essence of the Porsche Speedster along with the massive advancement in lighting fidelity that RTX and Turing would bring to the real-time industry,” he continues. “The words ‘speed’ and ‘light’ came to mind immediately.”

The team came together at SIGGRAPH 2018 to present a tech talk on the technology behind the cinematic and interactive pieces that make up the “Speed of Light” demo. “The cinematic transitions seamlessly to the interactive piece with exactly the same area lights, car asset, and poly count,” says Antoine. “The level of fidelity for both the cinematic and the lighting studio is impressive given the scene is fully dynamic and runs on first-generation hardware.”

Putting it all together

Creating the demo called for more than just great hardware. The team started with the Porsche concept car’s actual CATIA CAD manufacturing files and brought them into Unreal Engine using Datasmith, a suite of import tools included with Unreal Engine as of version 4.24.

The initial import came in at 40 million polygons, which the team selectively tessellated down to just under 10 million. This turned out to be unnecessary: cutting the polygon count by roughly a factor of four had minimal impact on rendering performance. “It turns out that NVIDIA RTX’s rendering performance isn’t affected as much by poly count as rasterization rendering is, which is a real plus for visualization of large datasets,” says Antoine. “Instead, the number of raycasts per pixel is a better way to gauge performance impact.”
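Antoine’s observation can be illustrated with a deliberately simplified cost model. The sketch below assumes that rasterization cost grows linearly with triangle count, while each ray traverses a bounding volume hierarchy (BVH) whose depth grows roughly with log2 of the triangle count. Real GPU costs also depend on memory bandwidth, shading, and scene layout, so treat the output as illustrative only; the function names are hypothetical.

```python
import math


def raster_cost(triangles: int) -> float:
    """Idealized rasterization cost: every triangle is processed once per frame."""
    return float(triangles)


def ray_trace_cost(triangles: int, width: int, height: int, rays_per_pixel: float) -> float:
    """Idealized ray-tracing cost: each ray walks a BVH whose depth
    grows roughly with log2 of the triangle count (an assumption)."""
    rays = width * height * rays_per_pixel
    return rays * math.log2(triangles)


if __name__ == "__main__":
    full, reduced = 40_000_000, 10_000_000  # polygon counts quoted above
    raster_ratio = raster_cost(full) / raster_cost(reduced)
    rt_ratio = ray_trace_cost(full, 1920, 1080, 1) / ray_trace_cost(reduced, 1920, 1080, 1)
    rpp_ratio = ray_trace_cost(reduced, 1920, 1080, 2) / ray_trace_cost(reduced, 1920, 1080, 1)
    print(f"raster, 40M vs 10M triangles:      {raster_ratio:.2f}x")
    print(f"ray tracing, 40M vs 10M triangles: {rt_ratio:.2f}x")
    print(f"ray tracing, 2 vs 1 rays/pixel:    {rpp_ratio:.2f}x")
```

Under this toy model, quadrupling the triangle count quadruples the raster cost but raises the ray-tracing cost by only about 9%, whereas doubling the rays per pixel doubles it, which matches the intuition that rays per pixel are the better gauge.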

The materials in the demo are highly varied, with both the interior and exterior of the concept car fully represented and a focus on making reflective and translucent surfaces look as accurate as possible. To accomplish this, the team utilized a great deal of real-world reference, even going so far as to disassemble actual Porsche car parts to get a better understanding of their internal structures and how they interact with light.

This approach was particularly useful in analyzing the Speedster’s taillights. “This new-found understanding, coupled with the more physically accurate behavior of ray tracing, allowed us to achieve much more realistic taillights than we previously could,” says Antoine.

In addition, the team had color management specialists at X-Rite scan several Porsche material samples, such as leather and paint swatches, into X-Rite’s proprietary AxF format, which Unreal Engine supports. These scans yielded high-resolution texture and normal maps for the car paint’s clear coat layer, the solid white paint, and the leather seat texture.

“While this was our first time experimenting with AxF,” says Antoine, “it proved useful in validating our material look-dev as well as contributing directly to final pixels.”

Real-time rendering at the speed of light

During real-time playback, the demo combines raster and ray-traced techniques: a raster base pass is computed first, and then ray-traced passes are launched. “With this approach, the demo can calculate complex effects that would be very hard to achieve with traditional raster techniques,” says Juan Canada, Lead Ray Tracing Programmer at Epic Games.
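To make the two-stage structure concrete, here is a toy Python sketch of a hybrid frame, assuming a scene made of a ground plane and one sphere lit by a point light. The “raster” pass is faked analytically (a top-down orthographic view of the plane) to fill a G-buffer of positions and normals, and the ray-traced pass then fires one shadow ray per G-buffer texel. All names are hypothetical; this is not how Unreal Engine structures its renderer internally.

```python
import math
from dataclasses import dataclass

Vec = tuple[float, float, float]


def sub(a: Vec, b: Vec) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


@dataclass
class GBufferTexel:
    position: Vec  # world-space position of the visible surface
    normal: Vec    # world-space surface normal


def raster_base_pass(width: int, height: int, extent: float) -> list[list[GBufferTexel]]:
    """Stand-in for rasterization: an orthographic camera looking straight down
    at the ground plane y = 0, so each pixel maps directly to a point on it."""
    sx, sz = extent / width, extent / height
    return [
        [GBufferTexel((-extent / 2 + (i + 0.5) * sx, 0.0, -extent / 2 + (j + 0.5) * sz), (0.0, 1.0, 0.0))
         for i in range(width)]
        for j in range(height)
    ]


def ray_hits_sphere(origin: Vec, direction: Vec, center: Vec, radius: float, max_t: float) -> bool:
    """Ray/sphere test for a unit-length direction; True if a hit lies in (0, max_t)."""
    oc = sub(origin, center)
    b = dot(oc, direction)
    c = dot(oc, oc) - radius * radius
    disc = b * b - c
    if disc < 0.0:
        return False
    root = math.sqrt(disc)
    return any(1e-6 < t < max_t for t in (-b - root, -b + root))


def ray_traced_shadow_pass(gbuffer, light: Vec, center: Vec, radius: float) -> list[list[bool]]:
    """For every G-buffer texel, trace one shadow ray toward a point light."""
    mask = []
    for row in gbuffer:
        mask_row = []
        for texel in row:
            to_light = sub(light, texel.position)
            dist = math.sqrt(dot(to_light, to_light))
            direction = (to_light[0] / dist, to_light[1] / dist, to_light[2] / dist)
            # Offset the origin slightly along the normal to avoid self-intersection.
            origin = tuple(p + 1e-4 * n for p, n in zip(texel.position, texel.normal))
            mask_row.append(ray_hits_sphere(origin, direction, center, radius, dist))
        mask.append(mask_row)
    return mask


if __name__ == "__main__":
    gbuffer = raster_base_pass(9, 9, 8.0)  # pass 1: raster G-buffer
    shadows = ray_traced_shadow_pass(gbuffer, (0.0, 10.0, 0.0), (0.0, 2.0, 0.0), 1.0)  # pass 2: rays
    for row in shadows:
        print("".join("#" if s else "." for s in row))
```

The point of the split is that primary visibility comes cheaply from rasterization, so rays are spent only on effects, such as shadows, that rasterization handles poorly.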

Ray tracing works by shooting a finite number of rays and sampling the light and color they return. Used alone, ray tracing would need a huge number of rays and samples per pixel to produce a photoreal image, and would therefore take a very long time to render. But if too few samples are taken in an effort to reduce render time, the image looks noisy, with a grainy, speckled appearance.
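Why too few samples look grainy can be shown with a small Monte Carlo experiment. The Python sketch below assumes a hypothetical flat patch where exactly 30% of an area light is visible from every pixel, estimates that value per pixel with random shadow-ray samples, and measures how much pixels that should be identical differ from one another.

```python
import random
import statistics

# Hypothetical ground truth: 30% of an area light is visible from every pixel,
# so a perfect render of this flat patch would be a uniform 0.3 everywhere.
TRUE_VISIBILITY = 0.3


def shade_pixel(rng: random.Random, samples: int) -> float:
    """Estimate one pixel's light visibility from `samples` random shadow rays."""
    hits = sum(1 for _ in range(samples) if rng.random() < TRUE_VISIBILITY)
    return hits / samples


def pixel_noise(samples: int, pixels: int = 2000, seed: int = 1) -> float:
    """Standard deviation across pixels that should all look identical."""
    rng = random.Random(seed)
    values = [shade_pixel(rng, samples) for _ in range(pixels)]
    return statistics.pstdev(values)


if __name__ == "__main__":
    for n in (1, 4, 16, 64, 256):
        print(f"{n:4d} samples/pixel -> noise {pixel_noise(n):.3f}")
```

The noise falls roughly as 1/sqrt(N): quadrupling the sample count only halves it, which is why brute-force sampling gets expensive so quickly.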

To keep the number of rays and samples to a minimum while still producing a photoreal image, real-time ray tracing relies on denoising, which reduces the noise (variance) that low sample counts leave in the image. A denoiser is not just a simple filter that blurs or averages neighboring pixels; it is a sophisticated algorithm that takes many factors into account, typically including surface depth, normals, and material properties, so that it can remove noise without smearing edges and detail. In fact, the quality of its denoiser can make or break a ray tracer’s usefulness when trading off speed against quality.
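The difference between blurring and denoising can be shown in one dimension. The Python sketch below compares a plain box blur with a depth-guided (joint bilateral) filter on a noisy scanline that crosses from one surface to another. Using depth as the only guide, and the chosen radius and sigma, are simplifying assumptions; production denoisers such as the one NVIDIA developed for this demo are far more sophisticated.

```python
import math
import random


def box_filter(values: list[float], radius: int) -> list[float]:
    """Plain neighborhood average: removes noise but also blurs across edges."""
    out = []
    for i in range(len(values)):
        window = values[max(0, i - radius): i + radius + 1]
        out.append(sum(window) / len(window))
    return out


def depth_guided_filter(values: list[float], depths: list[float],
                        radius: int, depth_sigma: float) -> list[float]:
    """Joint-bilateral-style filter: neighbors at a very different depth
    (likely another surface) receive almost no weight."""
    out = []
    for i in range(len(values)):
        total = weight_sum = 0.0
        for j in range(max(0, i - radius), min(len(values), i + radius + 1)):
            w = math.exp(-((depths[j] - depths[i]) ** 2) / (2.0 * depth_sigma ** 2))
            total += w * values[j]
            weight_sum += w
        out.append(total / weight_sum)
    return out


if __name__ == "__main__":
    rng = random.Random(7)
    # A 1D scanline: a dark surface at depth 1 next to a bright one at depth 5.
    truth = [0.2] * 20 + [0.8] * 20
    depths = [1.0] * 20 + [5.0] * 20
    noisy = [t + rng.gauss(0.0, 0.15) for t in truth]
    box = box_filter(noisy, 4)
    guided = depth_guided_filter(noisy, depths, 4, 0.5)
    for i in range(16, 24):
        print(f"px {i}: truth {truth[i]:.2f}  box {box[i]:.2f}  guided {guided[i]:.2f}")
```

Both filters average away noise in flat regions, but only the guided one keeps the boundary between the two surfaces sharp.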

For the “Speed of Light” demo, NVIDIA sought to improve on the denoiser used for the “Reflections” project. “The denoiser can now handle both glossy and rough materials with a single sample, often matching pretty closely the reference obtained with hundreds or thousands of samples,” says Ignacio Llamas, Senior Manager of Real-Time Rendering Software at NVIDIA. He credits software engineer Edward Liu at NVIDIA as being instrumental in the development of this advanced denoising technology.

One example of fast, effective denoising can be seen in the light streaks reflected on the car. Traditionally, real-time rendering would fake this effect with a simple texture on a plane. In “Speed of Light”, these streaks are generated by animated, textured area lights with ray-traced soft shadows. This mimics how such light streaks are created in a real photo studio, with light affecting both the diffuse and specular components of the car’s materials and producing much more realistic lighting.
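Soft shadows come from sampling the area light itself rather than treating it as a point. The Python sketch below shows the idea in a hypothetical 2D cross-section: it traces shadow rays from a floor point to evenly spaced (stratified) points across a textured area light and averages the unblocked, texture-weighted contributions. The geometry and the `texture` callback are assumptions for illustration, not Unreal Engine’s implementation.

```python
def area_light_visibility(receiver_x: float, samples: int = 64,
                          texture=lambda u: 1.0) -> float:
    """Fraction of a textured area light seen from a point on the floor (y = 0).

    Hypothetical 2D cross-section: the light spans x in [-1, 1] at y = 4,
    and a thin blocker spans x in [-0.5, 0.5] at y = 2.
    `texture(u)` gives the light's emitted intensity at u in [0, 1].
    """
    seen = total = 0.0
    for k in range(samples):
        u = (k + 0.5) / samples  # stratified sample position along the light
        light_x = -1.0 + 2.0 * u
        weight = texture(u)
        # The shadow ray from (receiver_x, 0) to (light_x, 4) crosses y = 2 halfway.
        cross_x = receiver_x + 0.5 * (light_x - receiver_x)
        if abs(cross_x) > 0.5:  # the ray misses the blocker
            seen += weight
        total += weight
    return seen / total


if __name__ == "__main__":
    for x in (0.0, 0.5, 1.0, 1.5, 2.0, 3.0):
        print(f"receiver x = {x:.1f}: visibility {area_light_visibility(x):.2f}")
    # A light brighter on one side shifts the penumbra's weighting.
    print(area_light_visibility(1.0, texture=lambda u: 2.0 if u > 0.5 else 1.0))
```

Moving the receiver outward takes it from full shadow (umbra) through a gradual penumbra to full light, which is the soft falloff a point light cannot produce.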

In addition to improving the real-time rendering of reflective surfaces, the demo represents a giant step forward for real-time ray-traced translucency. “It works by tracing primary rays from the camera against only the translucent geometry in the scene, up to the first opaque hit, which is computed by rasterizing the GBuffer,” explains Llamas. “For every translucent layer found along the ray, we then trace reflection and shadow rays. These reflection rays may themselves hit additional translucent layers and will also trace additional shadow rays.” He adds that ray-traced translucency does not yet include denoising, but that denoising is planned for future iterations.
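The per-pixel loop Llamas describes can be sketched in simplified form. The Python below assumes the translucent hits along a primary ray are already known, stops at the opaque depth taken from the G-buffer, and composites layers front to back. `shade_layer` is a hypothetical callback standing in for the reflection and shadow rays, and the recursive case where reflection rays hit further translucent layers is omitted.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class TranslucentHit:
    distance: float  # distance along the primary ray
    opacity: float   # 0.0 = fully clear, 1.0 = fully opaque
    albedo: float    # grayscale surface color, for brevity


def shade_translucent_ray(hits: list[TranslucentHit], opaque_depth: float,
                          opaque_color: float,
                          shade_layer: Callable[[TranslucentHit], float]) -> float:
    """Composite translucent layers front to back, stopping at the opaque depth
    taken from the rasterized G-buffer."""
    color, transmittance = 0.0, 1.0
    for hit in sorted(hits, key=lambda h: h.distance):
        if hit.distance >= opaque_depth:
            break  # this layer is hidden behind the opaque surface
        # In a real renderer, reflection and shadow rays would be traced here.
        color += transmittance * hit.opacity * shade_layer(hit)
        transmittance *= 1.0 - hit.opacity
    return color + transmittance * opaque_color


def lit(hit: TranslucentHit) -> float:
    """Hypothetical stand-in for reflection plus shadowed lighting of one layer."""
    return hit.albedo


if __name__ == "__main__":
    layers = [TranslucentHit(2.0, 0.5, 1.0), TranslucentHit(1.0, 0.5, 1.0),
              TranslucentHit(10.0, 0.9, 1.0)]
    print(shade_translucent_ray(layers, opaque_depth=5.0, opaque_color=0.0, shade_layer=lit))
```

Using the rasterized opaque depth as the stopping point means no rays are wasted on translucent surfaces that an opaque object already hides.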

Screen-space ray-traced dynamic diffuse global illumination made lighting the car more efficient. Because bounced (indirect) light was computed rather than faked, fewer lights were needed than before, and the lighting behaved with more nuance than was previously possible.

These advances in ray tracing technology made it possible for the “Speed of Light” demo to run in real time. “The average framerate of the cinematic sequence on the Quadro RTX 6000 is around 35-40 FPS at 1080p, which is higher than what we achieved on a DGX Station,” says Llamas.
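To put the quoted frame rate in perspective, the arithmetic below converts 35 to 40 FPS into a per-frame time budget and shows how quickly the ray count grows at 1080p (1920 x 1080). The rays-per-pixel figure is an assumption for illustration; the article does not say how many rays per pixel the demo traces.

```python
def frame_time_ms(fps: float) -> float:
    """Time available to render one frame, in milliseconds."""
    return 1000.0 / fps


def rays_per_second(width: int, height: int, rays_per_pixel: float, fps: float) -> float:
    """Total rays traced per second for a given resolution, ray budget, and frame rate."""
    return width * height * rays_per_pixel * fps


if __name__ == "__main__":
    for fps in (35, 40):
        print(f"{fps} FPS -> {frame_time_ms(fps):.1f} ms per frame")
    # Hypothetical budget of 1 ray per pixel at 1080p; the demo's actual
    # per-pixel ray counts are not stated in the article.
    print(f"{rays_per_second(1920, 1080, 1, 40):,.0f} rays/s at 1 ray per pixel, 40 FPS")
```

Every extra ray per pixel adds another full-resolution ray workload inside the same 25 to 29 ms frame budget, which is why low sample counts plus denoising are essential.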

Advancing the technology

Real-time ray tracing seemed impossible just a few years ago, but it is now within reach. As the technology advances, it will also become easier to set up and use. There will be no need for reflection probes, light baking, or complex lighting tricks to fake realism with raster rendering. Lighting and reflections will be driven by physical properties, eliminating the need for workarounds.

“There’s a lot more innovative tech there than you’ll notice, and that is a good thing,” says Antoine. “The tech shouldn’t attract your attention; it should just make the image look more plausible.”

Several factors have contributed to these advances. The first is NVIDIA’s new generation of graphics cards, which can trace rays fast enough to produce high-quality images in real time.

The second is DXR (DirectX Raytracing), an API that provides an environment in which ray-tracing architecture can be developed quickly. Advances in denoising technology have also played a role.

Epic Games is excited about the future of real-time ray tracing. “It’s a revolution that changes the economics of creating the highest fidelity imagery and experiences,” says Ken Pimentel, Senior Product Manager at Epic Games. “You’ll no longer be forced to choose between being physically accurate and being impossibly gorgeous; you’ll just get both in Unreal Engine.”

Want to be part of the revolution in real-time rendering? Download Unreal Engine today.